AWS NLB

In this guide you explore how to expose the K8sGateway proxy with an AWS network load balancer (NLB). The following use cases are covered:

- NLB HTTP: Create an HTTP listener on the NLB that exposes an HTTP endpoint on your gateway proxy. Traffic from the NLB to the proxy is not secured.

- TLS passthrough: Expose an HTTPS endpoint of your gateway with an NLB. The NLB passes through HTTPS traffic to the gateway proxy where the traffic is terminated.

Keep in mind the following considerations when working with an NLB:

- K8sGateway does not open any proxy ports until at least one HTTPRoute resource is created that references the gateway. However, AWS ELB health checks are automatically created and run after you create the gateway. Because of that, registered targets might appear unhealthy until an HTTPRoute resource is created.

- An AWS NLB applies an idle timeout of 350 seconds to TCP connections by default. Connections that stay idle for longer than this timeout are dropped by the NLB, which can increase the number of reset TCP connections.

Before you begin

- Create or use an existing AWS account.

- Follow the Get started guide to install K8sGateway, set up a gateway resource, and deploy the httpbin sample app.
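The commands in this guide call several command-line tools: `aws`, `eksctl`, `kubectl`, `helm`, `openssl`, `dig`, and `curl`. As an optional preflight check, the following sketch reports which of them are not on your `PATH`. The function name `missing_tools` is hypothetical and not part of K8sGateway or AWS tooling.

```shell
# Print the name of every listed CLI that is not on the PATH.
# Returns non-zero if at least one tool is missing.
missing_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      missing=1
    fi
  done
  return "$missing"
}

# Usage: missing_tools aws eksctl kubectl helm openssl dig curl
```

If the function prints nothing and returns zero, all listed tools were found.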

Step 1: Deploy the AWS Load Balancer controller

- Save the name and region of your AWS EKS cluster and your AWS account ID in environment variables.

      export CLUSTER_NAME="<cluster-name>"
      export REGION="<region>"
      export AWS_ACCOUNT_ID="<aws-account-ID>"
      export IAM_POLICY_NAME=AWSLoadBalancerControllerIAMPolicyNew
      export IAM_SA=aws-load-balancer-controller

- Create an AWS IAM policy and bind it to a Kubernetes service account.

      # Set up an IAM OIDC provider for a cluster to enable IAM roles for pods
      eksctl utils associate-iam-oidc-provider \
        --region ${REGION} \
        --cluster ${CLUSTER_NAME} \
        --approve

      # Fetch the IAM policy that is required for the Kubernetes service account
      curl -o iam-policy.json https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.5.3/docs/install/iam_policy.json

      # Create the IAM policy
      aws iam create-policy \
        --policy-name ${IAM_POLICY_NAME} \
        --policy-document file://iam-policy.json

      # Create the Kubernetes service account
      eksctl create iamserviceaccount \
        --cluster=${CLUSTER_NAME} \
        --namespace=kube-system \
        --name=${IAM_SA} \
        --attach-policy-arn=arn:aws:iam::${AWS_ACCOUNT_ID}:policy/${IAM_POLICY_NAME} \
        --override-existing-serviceaccounts \
        --approve \
        --region ${REGION}

- Verify that the service account is created in your cluster.

      kubectl -n kube-system get sa aws-load-balancer-controller -o yaml

- Deploy the AWS Load Balancer Controller.

      kubectl apply -k "github.com/aws/eks-charts/stable/aws-load-balancer-controller/crds?ref=master"

      helm repo add eks https://aws.github.io/eks-charts
      helm repo update
      helm install aws-load-balancer-controller eks/aws-load-balancer-controller \
        -n kube-system \
        --set clusterName=${CLUSTER_NAME} \
        --set serviceAccount.create=false \
        --set serviceAccount.name=${IAM_SA}
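Before you continue, you might want to confirm that the controller is running. The following sketch waits for the controller rollout to finish. It assumes that the Helm release created a Deployment named `aws-load-balancer-controller` in the `kube-system` namespace, which matches the release name used above but is not verified by this guide. The function name `wait_for_lb_controller` is hypothetical.

```shell
# Block until the controller Deployment reports a completed rollout,
# or give up after the timeout in $1 (default: 120s).
# Assumption: the Deployment is named after the Helm release.
wait_for_lb_controller() {
  kubectl -n kube-system rollout status \
    deployment/aws-load-balancer-controller \
    --timeout="${1:-120s}"
}

# Usage: wait_for_lb_controller 180s
```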

Step 2: Deploy your gateway proxy

Depending on the annotations that you use on your gateway proxy, you can configure the NLB in different ways.

NLB HTTP: Follow these steps to create a simple NLB that accepts HTTP traffic on port 80 and forwards it to the HTTP listener on your gateway proxy.

-

Create a GatewayParameters resource with custom AWS annotations. These annotations instruct the AWS load balancer controller to expose the gateway proxy with a public-facing AWS NLB.

kubectl apply -f- <<EOF apiVersion: gateway.gloo.solo.io/v1alpha1 kind: GatewayParameters metadata: name: custom-gw-params namespace: gloo-system spec: kube: service: extraAnnotations: service.beta.kubernetes.io/aws-load-balancer-type: "external" service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: "instance" EOFSetting Description aws-load-balancer-type: "external"Instruct Kubernetes to pass the Gateway’s service configuration to the AWS load balancer controller that you created earlier instead of using the built-in capabilities in Kubernetes. For more information, see the AWS documentation. aws-load-balancer-scheme: internet-facingCreate the NLB with a public IP addresses that is accessible from the internet. For more information, see the AWS documentation. aws-load-balancer-nlb-target-type: "instance"Use the Gateway’s instance ID to register it as a target with the NLB. For more information, see the AWS documentation. -

Create a Gateway resource that references the custom GatewayParameters resource that you created.

kubectl apply -f- <<EOF kind: Gateway apiVersion: gateway.networking.k8s.io/v1 metadata: name: aws-cloud namespace: gloo-system annotations: gateway.gloo.solo.io/gateway-parameters-name: "custom-gw-params" spec: gatewayClassName: gloo-gateway listeners: - protocol: HTTP port: 80 name: http allowedRoutes: namespaces: from: All EOF -

Verify that your gateway is created.

kubectl get gateway aws-cloud -n gloo-system -

Verify that the gateway service is exposed with an AWS NLB and assigned an AWS hostname.

kubectl get services gloo-proxy-aws-cloud -n gloo-systemExample output:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE gloo-proxy-aws-cloud LoadBalancer 172.20.181.57 k8s-gloosyst-glooprox-e11111a111-111a1111aaaa1aa.elb.us-east-2.amazonaws.com 80:30557/TCP 12m -

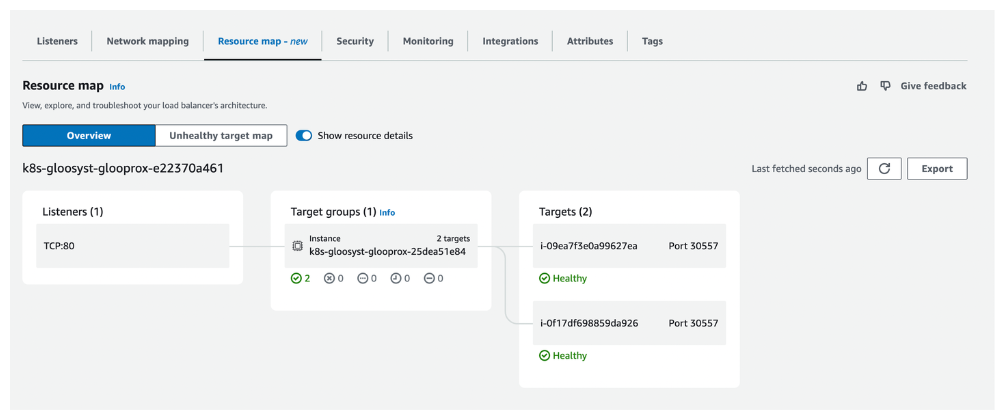

Review the NLB in the AWS EC2 dashboard.

- Go to the AWS EC2 dashboard.

- Go to Load Balancing > Load Balancers, and find and open the load balancer that was created for you.

- On the Resource map tab, verify that the load balancer points to targets in your cluster.

- Continue with Step 3: Test traffic to the NLB.
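It can take a short while until AWS provisions the NLB and the hostname shows up on the gateway service. Instead of rerunning `kubectl get services` by hand, you could poll for the hostname. The following is a minimal sketch that uses the same jsonpath expression as Step 3. The function name `wait_for_nlb_hostname` and the default of 30 attempts with a 10-second delay are assumptions for illustration.

```shell
# Poll a Service until the load balancer hostname is published, then print it.
# $1 = Service name, $2 = namespace,
# $3 = max attempts (default 30), $4 = delay in seconds (default 10)
wait_for_nlb_hostname() {
  svc="$1"; ns="$2"; attempts="${3:-30}"; delay="${4:-10}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    host=$(kubectl get svc "$svc" -n "$ns" \
      -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' 2>/dev/null)
    if [ -n "$host" ]; then
      echo "$host"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "timed out waiting for a hostname on ${ns}/${svc}" >&2
  return 1
}

# Usage: wait_for_nlb_hostname gloo-proxy-aws-cloud gloo-system
```

A published hostname only means that the NLB exists. As noted in the considerations, its targets can still appear unhealthy until an HTTPRoute references the gateway.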

TLS passthrough: Pass through HTTPS requests from the AWS NLB to your gateway proxy, and terminate TLS traffic at the gateway proxy for added security.

- Create a GatewayParameters resource with custom AWS annotations. These annotations instruct the AWS load balancer controller to expose the gateway proxy with a public-facing AWS NLB.

      kubectl apply -f- <<EOF
      apiVersion: gateway.gloo.solo.io/v1alpha1
      kind: GatewayParameters
      metadata:
        name: custom-gw-params
        namespace: gloo-system
      spec:
        kube:
          service:
            extraAnnotations:
              service.beta.kubernetes.io/aws-load-balancer-type: "external"
              service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
              service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: "instance"
      EOF

  | Setting | Description |
  |---|---|
  | aws-load-balancer-type: "external" | Instruct Kubernetes to pass the Gateway’s service configuration to the AWS load balancer controller that you created earlier instead of using the built-in capabilities in Kubernetes. For more information, see the AWS documentation. |
  | aws-load-balancer-scheme: internet-facing | Create the NLB with a public IP address that is accessible from the internet. For more information, see the AWS documentation. |
  | aws-load-balancer-nlb-target-type: "instance" | Use the Gateway’s instance ID to register it as a target with the NLB. For more information, see the AWS documentation. |

- Create a self-signed TLS certificate to configure your gateway proxy with an HTTPS listener.

  - Create a directory to store your TLS credentials in.

        mkdir example_certs

  - Create a self-signed root certificate. The following command creates a root certificate that is valid for a year and can serve any hostname. You use this certificate to sign the server certificate for the gateway later. For other command options, see the OpenSSL docs.

        # root cert
        openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -subj '/O=any domain/CN=*' -keyout example_certs/root.key -out example_certs/root.crt

  - Use the root certificate to sign the gateway certificate.

        openssl req -out example_certs/gateway.csr -newkey rsa:2048 -nodes -keyout example_certs/gateway.key -subj "/CN=*/O=any domain"
        openssl x509 -req -sha256 -days 365 -CA example_certs/root.crt -CAkey example_certs/root.key -set_serial 0 -in example_certs/gateway.csr -out example_certs/gateway.crt

  - Create a Kubernetes secret to store your server TLS certificate. You create the secret in the same cluster and namespace that the gateway is deployed to.

        kubectl create secret tls -n gloo-system https \
          --key example_certs/gateway.key \
          --cert example_certs/gateway.crt
        kubectl label secret https gateway=https --namespace gloo-system

- Create a Gateway with an HTTPS listener that terminates incoming TLS traffic. Make sure to reference the custom GatewayParameters resource and the Kubernetes secret that contains the TLS certificate information.

      kubectl apply -f- <<EOF
      apiVersion: gateway.networking.k8s.io/v1
      kind: Gateway
      metadata:
        name: aws-cloud
        namespace: gloo-system
        labels:
          gateway: aws-cloud
        annotations:
          gateway.gloo.solo.io/gateway-parameters-name: "custom-gw-params"
      spec:
        gatewayClassName: gloo-gateway
        listeners:
          - name: https
            port: 443
            protocol: HTTPS
            hostname: https.example.com
            tls:
              mode: Terminate
              certificateRefs:
                - name: https
                  kind: Secret
            allowedRoutes:
              namespaces:
                from: All
      EOF

- Verify that your gateway is created.

      kubectl get gateway aws-cloud -n gloo-system

- Verify that the gateway service is exposed with an AWS NLB and assigned an AWS hostname.

      kubectl get services gloo-proxy-aws-cloud -n gloo-system

  Example output:

      NAME                   TYPE           CLUSTER-IP      EXTERNAL-IP                                                                   PORT(S)         AGE
      gloo-proxy-aws-cloud   LoadBalancer   172.20.181.57   k8s-gloosyst-glooprox-e11111a111-111a1111aaaa1aa.elb.us-east-2.amazonaws.com   443:30557/TCP   12m

- Review the load balancer in the AWS EC2 dashboard.

  - Go to the AWS EC2 dashboard.

  - Go to Load Balancing > Load Balancers. Find and open the load balancer that was created for you.

  - On the Resource map tab, verify that the load balancer points to targets in your cluster.

- Continue with Step 3: Test traffic to the NLB.
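If the TLS handshake fails later, a mismatched or missing certificate file is a likely cause. As an optional sanity check, the following sketch confirms that the gateway certificate in `example_certs` chains to the self-signed root certificate and prints its subject and expiry date. It uses the standard `openssl verify` and `openssl x509` subcommands. The function name `verify_gateway_cert` is hypothetical.

```shell
# Check that gateway.crt chains to root.crt, then print its subject and
# expiry date.
# $1 = directory that holds root.crt and gateway.crt (default: example_certs)
verify_gateway_cert() {
  cert_dir="${1:-example_certs}"
  for f in root.crt gateway.crt; do
    if [ ! -f "${cert_dir}/${f}" ]; then
      echo "missing ${cert_dir}/${f}" >&2
      return 1
    fi
  done
  openssl verify -CAfile "${cert_dir}/root.crt" "${cert_dir}/gateway.crt" || return 1
  openssl x509 -in "${cert_dir}/gateway.crt" -noout -subject -enddate
}

# Usage: verify_gateway_cert example_certs
```

This check only covers the local files. It does not inspect the `https` secret in the cluster.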

Step 3: Test traffic to the NLB

NLB HTTP:

- Create an HTTPRoute resource and associate it with the gateway that you created.

      kubectl apply -f- <<EOF
      apiVersion: gateway.networking.k8s.io/v1
      kind: HTTPRoute
      metadata:
        name: httpbin-elb
        namespace: httpbin
        labels:
          example: httpbin-route
      spec:
        parentRefs:
          - name: aws-cloud
            namespace: gloo-system
        hostnames:
          - "www.nlb.com"
        rules:
          - backendRefs:
              - name: httpbin
                port: 8000
      EOF

- Get the AWS hostname of the NLB.

      export INGRESS_GW_ADDRESS=$(kubectl get svc -n gloo-system gloo-proxy-aws-cloud -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
      echo $INGRESS_GW_ADDRESS

- Send a request to the httpbin app.

      curl -vik http://$INGRESS_GW_ADDRESS:80/headers -H "host: www.nlb.com:80"

- Go back to the AWS EC2 dashboard and verify that the NLB health checks now show a Healthy status.
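As an alternative to the dashboard, the target health can also be read with the AWS CLI. The following sketch looks up the NLB by its DNS name and prints the ID and health state of each registered target, using the `aws elbv2` subcommands `describe-load-balancers`, `describe-target-groups`, and `describe-target-health`. It assumes that your AWS CLI is configured for the region of the cluster. The function name `nlb_target_health` is hypothetical.

```shell
# Print the ID and health state of every target behind the NLB with DNS name $1.
nlb_target_health() {
  dns_name="$1"
  lb_arn=$(aws elbv2 describe-load-balancers \
    --query "LoadBalancers[?DNSName=='${dns_name}'].LoadBalancerArn" \
    --output text) || return 1
  if [ -z "$lb_arn" ]; then
    echo "no load balancer found for ${dns_name}" >&2
    return 1
  fi
  # An NLB has one target group per listener port, so loop over all of them.
  for tg_arn in $(aws elbv2 describe-target-groups \
      --load-balancer-arn "$lb_arn" \
      --query 'TargetGroups[].TargetGroupArn' --output text); do
    aws elbv2 describe-target-health --target-group-arn "$tg_arn" \
      --query 'TargetHealthDescriptions[].[Target.Id,TargetHealth.State]' \
      --output text
  done
}

# Usage: nlb_target_health "$INGRESS_GW_ADDRESS"
```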

TLS passthrough:

- Create an HTTPRoute resource and associate it with the gateway that you created.

      kubectl apply -f- <<EOF
      apiVersion: gateway.networking.k8s.io/v1
      kind: HTTPRoute
      metadata:
        name: httpbin-elb
        namespace: httpbin
        labels:
          example: httpbin-route
      spec:
        parentRefs:
          - name: aws-cloud
            namespace: gloo-system
        hostnames:
          - "https.example.com"
        rules:
          - backendRefs:
              - name: httpbin
                port: 8000
      EOF

- Get the IP address that is associated with the NLB’s AWS hostname.

      export INGRESS_GW_HOSTNAME=$(kubectl get svc -n gloo-system gloo-proxy-aws-cloud -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
      echo $INGRESS_GW_HOSTNAME
      export INGRESS_GW_ADDRESS=$(dig +short ${INGRESS_GW_HOSTNAME} | head -1)
      echo $INGRESS_GW_ADDRESS

- Send a request to the httpbin app. Verify that you see a successful TLS handshake and that you get back a 200 HTTP response code from the httpbin app.

      curl -vik --resolve "https.example.com:443:${INGRESS_GW_ADDRESS}" https://https.example.com:443/status/200

  Example output:

      * Hostname https.example.com was found in DNS cache
      *   Trying 3.XX.XXX.XX:443...
      * Connected to https.example.com (3.XX.XXX.XX) port 443 (#0)
      * ALPN, offering h2
      * ALPN, offering http/1.1
      * successfully set certificate verify locations:
      *  CAfile: /etc/ssl/cert.pem
      *  CApath: none
      * TLSv1.2 (OUT), TLS handshake, Client hello (1):
      * TLSv1.2 (IN), TLS handshake, Server hello (2):
      * TLSv1.2 (IN), TLS handshake, Certificate (11):
      * TLSv1.2 (IN), TLS handshake, Server key exchange (12):
      * TLSv1.2 (IN), TLS handshake, Server finished (14):
      * TLSv1.2 (OUT), TLS handshake, Client key exchange (16):
      * TLSv1.2 (OUT), TLS change cipher, Change cipher spec (1):
      * TLSv1.2 (OUT), TLS handshake, Finished (20):
      * TLSv1.2 (IN), TLS change cipher, Change cipher spec (1):
      * TLSv1.2 (IN), TLS handshake, Finished (20):
      * SSL connection using TLSv1.2 / ECDHE-RSA-CHACHA20-POLY1305
      * ALPN, server accepted to use h2
      * Server certificate:
      *  subject: CN=*; O=any domain
      *  start date: Jul 23 20:03:48 2024 GMT
      *  expire date: Jul 23 20:03:48 2025 GMT
      *  issuer: O=any domain; CN=*
      *  SSL certificate verify result: unable to get local issuer certificate (20), continuing anyway.
      * Using HTTP2, server supports multi-use
      * Connection state changed (HTTP/2 confirmed)
      * Copying HTTP/2 data in stream buffer to connection buffer after upgrade: len=0
      * Using Stream ID: 1 (easy handle 0x14200fe00)
      ...
      < HTTP/2 200
      HTTP/2 200
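The request above tests only the first IP address that `dig` returns, but an NLB hostname can resolve to several addresses. The following sketch sends one request per address and prints the HTTP status code for each, reusing the `--resolve` approach from the previous step. The function name `check_each_nlb_ip` is hypothetical, and the filter keeps only IPv4 answers.

```shell
# Send one HTTPS request per NLB IP address and print "<ip> <http-status>".
# $1 = NLB hostname, $2 = hostname that is configured on the HTTPS listener
check_each_nlb_ip() {
  nlb_host="$1"; sni_host="$2"
  for ip in $(dig +short "$nlb_host" | grep -E '^[0-9]+(\.[0-9]+){3}$'); do
    # -k skips certificate verification because the certificate is self-signed.
    code=$(curl -sk -o /dev/null -w '%{http_code}' \
      --resolve "${sni_host}:443:${ip}" \
      "https://${sni_host}:443/status/200")
    echo "${ip} ${code}"
  done
}

# Usage: check_each_nlb_ip "$INGRESS_GW_HOSTNAME" https.example.com
```

A status of `000` for an address means that curl could not complete the request against that address.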

Cleanup

You can remove the resources that you created in this guide. The secret exists only if you followed the TLS passthrough steps.

    kubectl delete gatewayparameters custom-gw-params -n gloo-system
    kubectl delete gateway aws-cloud -n gloo-system
    kubectl delete httproute httpbin-elb -n httpbin
    kubectl delete secret https -n gloo-system